Identifying Customers and Customer Needs

The team conducted seven interviews with three types of stakeholders. After the interviews, we used card sorting to create a list of 48 needs.

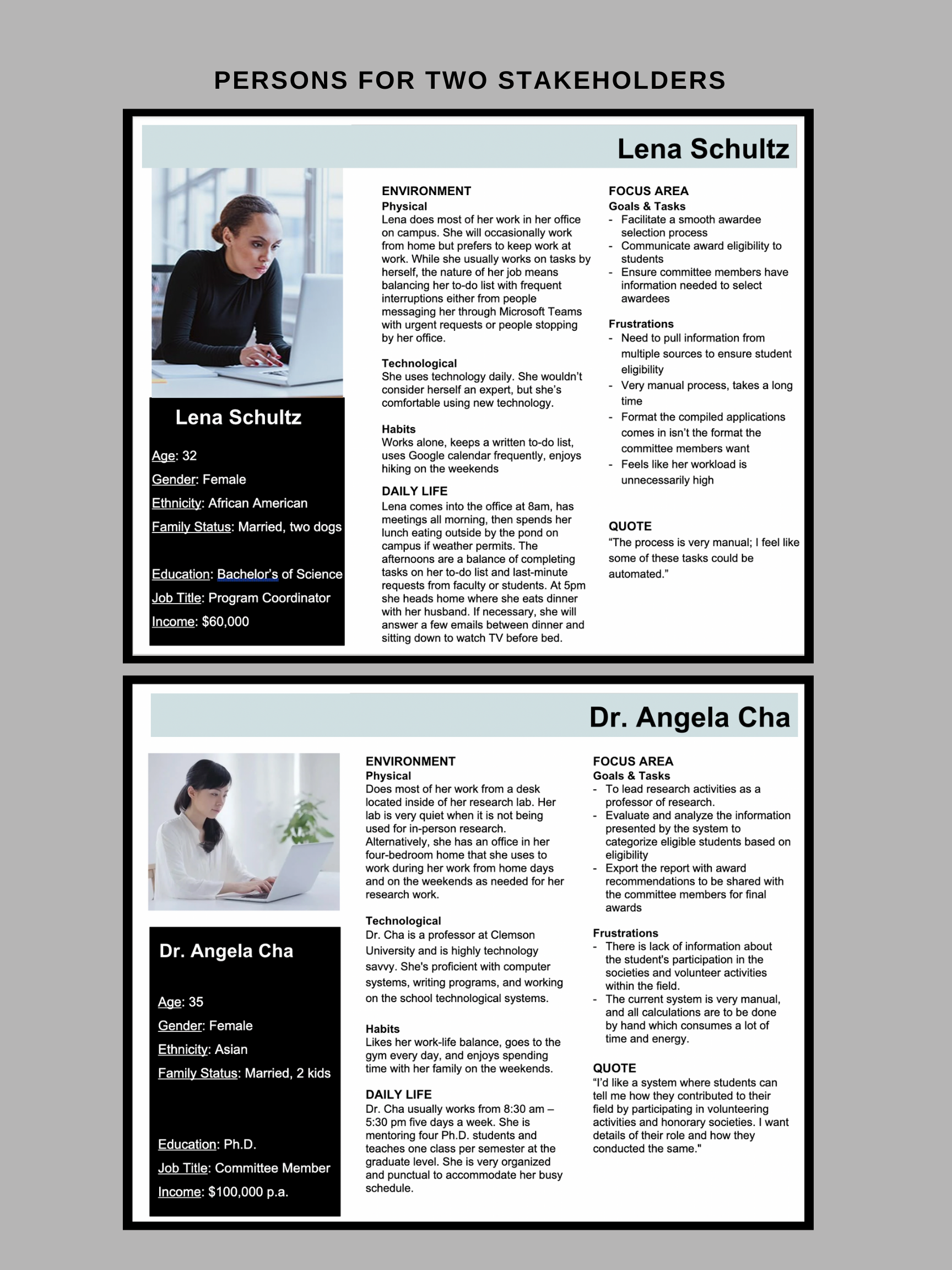

We created personas for the three stakeholder types to understand their frustrations, behavior patterns, and demographics.

Target Specifications

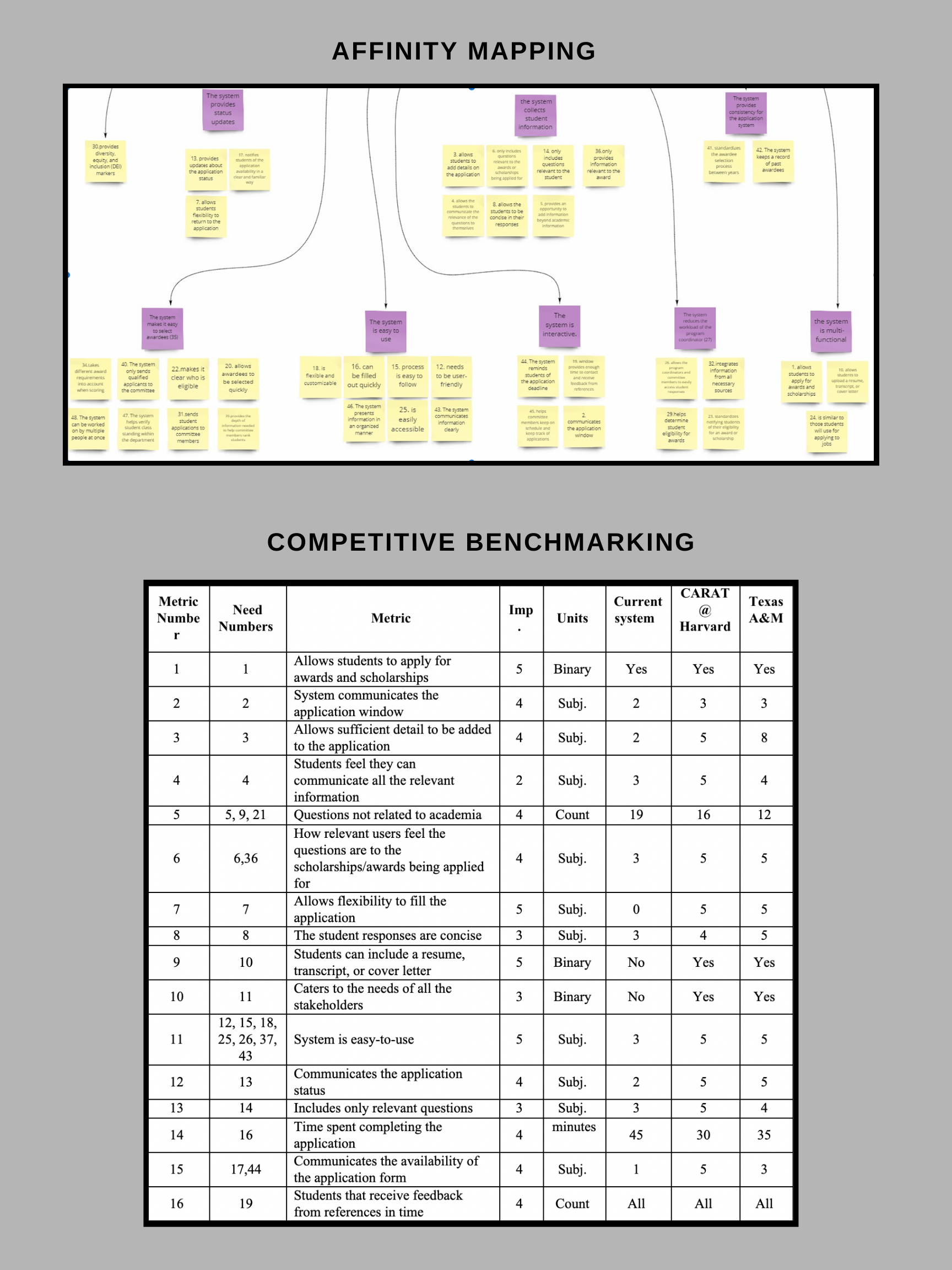

Using affinity mapping, we organized these needs into a list of primary and secondary needs.

A stakeholder survey helped us understand the relative importance of each need.
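One common way to turn such a survey into a ranking is to average each need's importance ratings and sort by the mean. The sketch below assumes a 1 to 5 rating scale; the need names and responses are hypothetical, not the team's actual survey data.

```python
from statistics import mean

# Hypothetical survey responses: each stakeholder rates each need
# from 1 (not important) to 5 (critical). Names and ratings are illustrative only.
responses = {
    "Upload documents easily": [5, 4, 5, 4],
    "Track application status": [4, 5, 4, 4],
    "View scoring rubric": [3, 4, 5, 3],
}


def rank_needs(responses):
    """Return (need, mean rating) pairs sorted from most to least important."""
    scores = {need: mean(ratings) for need, ratings in responses.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


for need, score in rank_needs(responses):
    print(f"{score:.2f}  {need}")
```

A mean is the simplest summary. With small samples like seven interviewees, it is worth also looking at the spread of ratings before treating close scores as different.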

Next, all the needs were categorized as subjective, objective, or binary, and metrics were associated with them.

We conducted competitive benchmarking of the Carat@Harvard system and the Texas A&M system through online research and interviews with students.

Lastly, we compared the ideal and marginal values for each metric against the values achieved by the competing systems.
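The comparison can be sketched in code. This assumes the usual target-specification meaning: the marginal value is the barely acceptable level and the ideal value is the best hoped-for level. Each metric also records whether higher or lower is better. The metrics, targets, and "Competitor A/B" values below are hypothetical placeholders, not measurements of the benchmarked systems.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    unit: str
    marginal: float          # barely acceptable value (assumed meaning)
    ideal: float             # best value the team hopes to reach (assumed meaning)
    higher_is_better: bool


def classify(metric, value):
    """Place a competitor's value relative to the metric's marginal and ideal targets."""
    # Flip the sign for lower-is-better metrics so one comparison works for both.
    sign = 1 if metric.higher_is_better else -1
    if sign * value >= sign * metric.ideal:
        return "meets ideal"
    if sign * value >= sign * metric.marginal:
        return "meets marginal"
    return "below marginal"


# Hypothetical metrics and competitor values, for illustration only.
metrics = [
    Metric("Time to submit an application", "min", marginal=30, ideal=15, higher_is_better=False),
    Metric("Task success rate", "%", marginal=80, ideal=95, higher_is_better=True),
]
competitors = {
    "Competitor A": {"Time to submit an application": 25, "Task success rate": 90},
    "Competitor B": {"Time to submit an application": 12, "Task success rate": 75},
}

for name, values in competitors.items():
    for m in metrics:
        value = values[m.name]
        print(f"{name} | {m.name}: {value} {m.unit} -> {classify(m, value)}")
```

Storing the direction on each metric keeps a single comparison rule, so "faster" and "more successful" are not handled by separate code paths.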

Low-Fidelity Prototypes, Task Analysis, and Heuristic Evaluation

The team generated 50 hand-sketched concepts and refined them over two rounds of iteration.

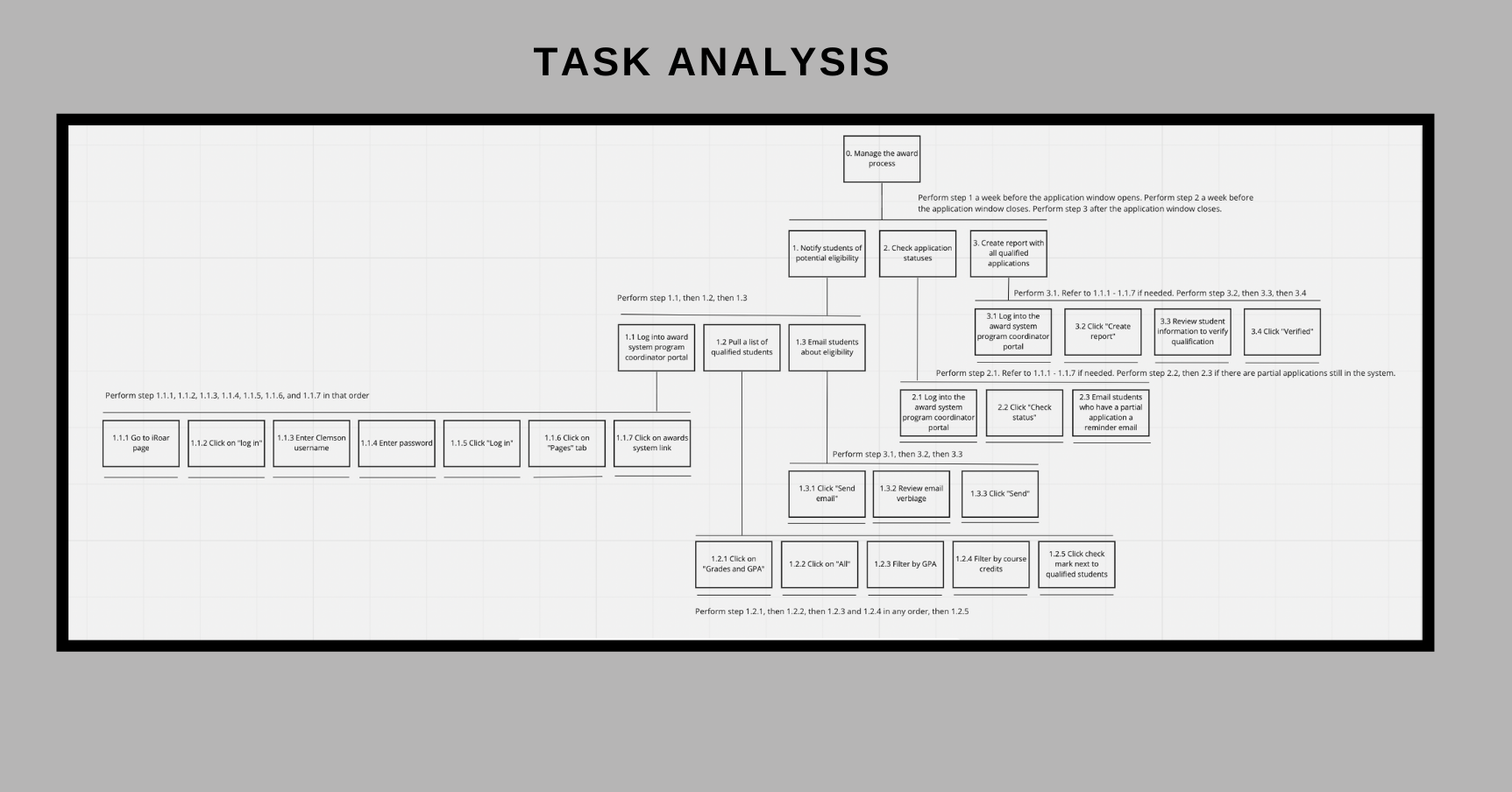

Next, we created a detailed step-by-step task analysis model to ensure that the prototype matched the steps stakeholders needed to perform.

The task analysis model helped us find inconsistencies between the concepts and the stakeholders' actual processes. For example, we discovered that committee members needed a placeholder for the scoring rubric, so we added one at this step.

Next, each team member conducted an individual heuristic evaluation using Jakob Nielsen's 10 usability heuristics.

User Testing

After completing the final prototype design, we conducted user testing to understand user experience, usability, and mental workload.

The team selected three user testing objectives:

Objective one: Understand users' perspectives on the application portal and user interface

Objective two: Understand the usability of the award application system

Objective three: Understand users' mental workload while interacting with the application portal

First, we conducted think-aloud testing and semi-structured interviews with the stakeholders.

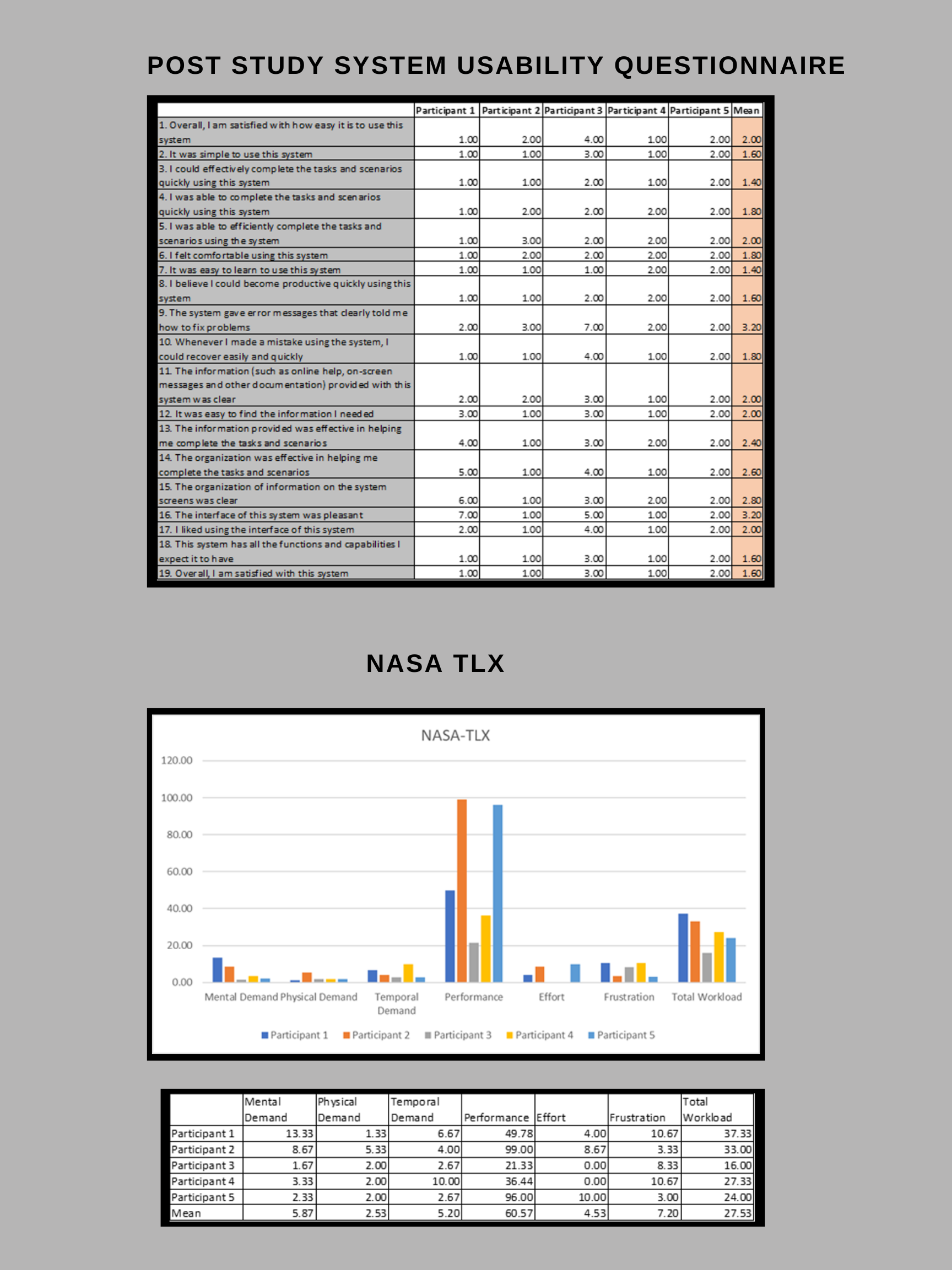

After the interviews, the team sent participants links to the NASA-TLX (Task Load Index) and Post-Study System Usability Questionnaire (PSSUQ) surveys. These surveys measured the workload users experienced and the perceived usability of the award selection portal, respectively.
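The sketch below shows how the two surveys are typically scored. NASA-TLX has six subscales, each rated from 0 to 100. The raw score is their mean. The weighted score multiplies each rating by the number of times the participant picked that subscale across 15 pairwise comparisons, then divides by 15. For PSSUQ, the sketch assumes version 3 (16 items rated 1 to 7, where lower is better) with its standard subscales. The text does not say which TLX variant or PSSUQ version the team used, and all participant data here is hypothetical.

```python
from itertools import combinations
from statistics import mean

# NASA-TLX subscales; each is rated on a 0-100 scale where higher means more workload.
SUBSCALES = ["Mental", "Physical", "Temporal", "Performance", "Effort", "Frustration"]
PAIRS = list(combinations(SUBSCALES, 2))  # the 15 pairwise comparisons


def tlx_raw(ratings):
    """Raw TLX: unweighted mean of the six subscale ratings."""
    return mean(ratings[s] for s in SUBSCALES)


def tlx_weighted(ratings, pair_choices):
    """Weighted TLX: each subscale is weighted by how often the participant
    picked it as the bigger contributor to workload across the 15 pairs."""
    if set(pair_choices) != set(PAIRS):
        raise ValueError("expected exactly one choice for each of the 15 pairs")
    weights = {s: 0 for s in SUBSCALES}
    for pair, winner in pair_choices.items():
        if winner not in pair:
            raise ValueError(f"{winner!r} is not part of pair {pair}")
        weights[winner] += 1
    # The weights always sum to 15, so dividing by 15 keeps the 0-100 scale.
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


def pssuq_scores(responses):
    """PSSUQ v3 (assumed): 16 items rated 1 (strongly agree) to 7 (strongly disagree);
    lower scores mean better perceived usability. None marks an N/A item."""
    if len(responses) != 16:
        raise ValueError("PSSUQ version 3 has 16 items")

    def avg(items):
        answered = [r for r in items if r is not None]
        return mean(answered) if answered else None

    return {
        "Overall": avg(responses[0:16]),    # items 1-16
        "SysUse": avg(responses[0:6]),      # items 1-6
        "InfoQual": avg(responses[6:12]),   # items 7-12
        "IntQual": avg(responses[12:15]),   # items 13-15
    }


# Hypothetical data for one participant, for illustration only.
ratings = {"Mental": 60, "Physical": 10, "Temporal": 40,
           "Performance": 30, "Effort": 50, "Frustration": 20}
# The participant always picks whichever subscale comes first in this order.
preference = ["Mental", "Effort", "Temporal", "Frustration", "Performance", "Physical"]
pair_choices = {pair: min(pair, key=preference.index) for pair in PAIRS}

print(tlx_raw(ratings))                     # 35
print(tlx_weighted(ratings, pair_choices))  # 46.0
print(pssuq_scores([2, 2, 3, 2, 1, 2, 3, 3, 4, 3, 2, 3, 2, 2, 1, 2]))
```

The weighted score (46.0) is higher than the raw score (35) because this participant gave the most weight to the subscales they also rated highest. The pairwise step captures this emphasis on the subscales that matter most to each participant.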

Wireframing Tools

What did I learn?

Trust the process

Competitive benchmarking helps reveal the trends in similar systems.

Nielsen's heuristics help catch usability problems early, before user testing.

NASA-TLX and PSSUQ provide quantitative measures of user workload and system usability.